The COVID-19 pandemic halted many states’ annual testing programs in spring 2020. As a result, states and school districts are relying on interim assessments to better inform needed changes in instruction and address learner readiness.

Increasingly, district leaders are turning to Cognia™ to determine whether assessments and test items are equitable, and whether they provide valid information about how well students are making progress toward standards. Often, district officials want to be sure that the data they collect from their assessments accurately reflects what is going on in the classroom.

Cognia’s Assessment Review Service helps educators evaluate locally created assessments to answer these questions:

- Do the assessments contain inequitable items?

- Are the assessments collecting the right evidence to support what educators think is happening?

- If the evidence conflicts with their expectations, why?

Specifically, the reviews help determine whether tests are free of racial, gender, and socioeconomic bias.

Nick Baughman, associate superintendent of learning and instruction at Yorkville (IL) District 115, came to Cognia in spring 2020 out of concern that the district’s assessments had “no quantifiable or qualitative way to ensure that assessments were free of bias.”

The district had developed its own local assessments based on its curriculum, which were validated after the first administration and adjusted based on academic responses. But, Baughman notes, “Little consideration was given to local assessment bias, and disaggregation of the data by sub-group routinely took place during benchmark assessments, which only happened three times annually.”

Cognia’s review “exposed the low level of cognitive complexity and difficulty contained in local core content area assessments,” Baughman says. “Using Webb’s Depth of Knowledge vocabulary as a frame of reference, it became crystal clear that our local assessments allowed us to effectively understand student levels of recall and reproduction, yet not necessarily short-term strategic thinking.”

Baughman says that the district has struggled with a demographic achievement gap (ethnic and economic) for years.

“Until the data exposed the academic differences, bias and equity were not part of the conversation. Before we analyzed actual data, the achievement gap was suspected and not widely accepted as actual. After reflection and review, it became apparent that bias, sensitivity, and equity needed to become part of the discussion. School improvement teams and district improvement teams now write, vocalize, and follow through on actions that are intentionally aligned to closing the achievement gap.”

Equity and Universal Design

Cognia’s expert reviewers identify which test items are accessible to all students and which are not. They help educators recognize the connection of test items to curriculum and instruction by emphasizing that assessment items need to reflect learning outcomes and be grade appropriate.

The reviews are based on five key components of a high-quality assessment: test design and structure, stimuli, test items, item alignment to standards, and scoring materials.

The review flags items that expose students to stereotypical information and microaggressions. Such items lower efficacy and create unintended emotional distraction. The review also evaluates whether the assessments are readable and accessible for students with disabilities and learning exceptionalities and for non-native English speakers, who may not be strong readers but receive no special accommodations.

Cognia helps ensure that educators understand what equitable assessments look like and are familiar with the processes that help continually refine their assessment system. By selecting examples directly from the district’s assessments and demonstrating how to improve or repair items, administrators and teachers learn how to adjust their assessments. Item-by-item feedback helps educators make immediate improvements to existing assessments and ensure quality in those being developed or revised.

Common challenges

Common themes emerge across many districts’ assessments. Reviewers often find:

- Items that came from assessment banks aligned to previous standards

- Unnecessary use of male pronouns “he/him” in questions that refer to occupations in health science and other fields

- Subject matter that revives trauma, as when items refer to recent hurricanes or floods that may remind a student of personal loss or danger
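As an illustration only, and not a description of Cognia’s review process, a district could pre-screen its own items for one of the patterns above before a human review. The minimal Python sketch below flags items that contain gendered pronouns so that a reviewer can decide whether the pronoun is necessary. The function name, the pronoun list, and the sample items are all hypothetical.

```python
import re

# Hypothetical pronoun list for illustration; a real review would rely on
# human judgment, not only on pattern matching.
GENDERED_PRONOUNS = re.compile(r"\b(he|him|his|she|her|hers)\b", re.IGNORECASE)


def flag_gendered_items(items):
    """Return (index, matched pronouns) for each item that contains a gendered pronoun."""
    flagged = []
    for index, text in enumerate(items):
        # findall returns the matched words; lowercase them for consistent reporting
        matches = [word.lower() for word in GENDERED_PRONOUNS.findall(text)]
        if matches:
            flagged.append((index, matches))
    return flagged


# Made-up sample items, not taken from any real assessment
sample_items = [
    "A nurse checks a patient's pulse. What should he record first?",
    "A technician measures 12 mL of saline. How many mL remain from 50 mL?",
]

print(flag_gendered_items(sample_items))
```

A screen like this only surfaces candidates. Whether a pronoun is unnecessary, and how to reword the item, remains a reviewer’s decision.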

The best testing practice also avoids mention of racial issues. In all subject areas, those issues are best raised in classroom discussions where there is a teacher to mediate the discussion.

Finally, reviewers often find that educators do not understand Universal Design for Assessment and that scoring materials provide too little information to guide teachers in scoring student work consistently.

Interim assessments are especially important now to help inform instruction and to determine how to help students make up for lost learning due to the pandemic. To be valid and equitable, those assessments need to take into account the considerations we’ve described.

In our experience, writing good test items starts with thinking about the purpose of the assessment and what evidence of learning the assessment is trying to elicit. This backward design process focuses on revealing student misconceptions and identifying whether there is a gap in understanding or a gap in the curriculum or instruction.

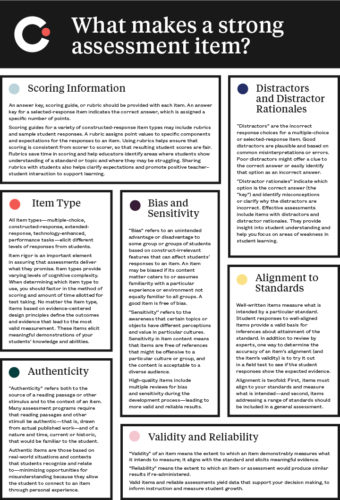

Interested in learning more about what makes a strong assessment item? Download the infographic.

For more information on Monitoring Equity in Assessments/Assessments Items, watch the on-demand webinar.

© Cognia Inc.

This article may be republished or reproduced in accordance with The Source Copyright Policy.

The information in this article is given to the reader with the understanding that neither the author nor Cognia is engaged in rendering any legal or business advice to the user or general public. The views, thoughts, and opinions expressed in this article belong solely to the author(s), and do not necessarily reflect the official policy or position of Cognia, the author’s employer, organization, or other group or individual.